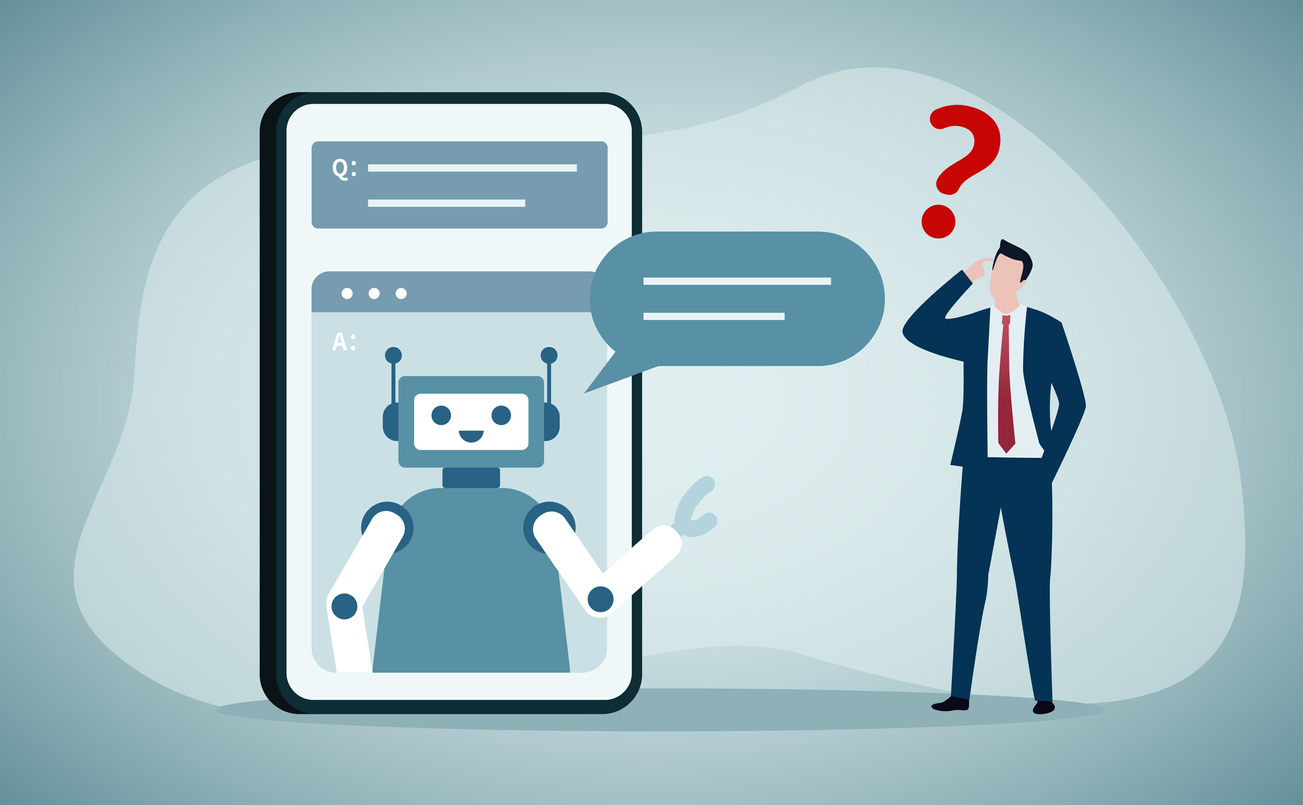

The Future of ChatGPT in Health Care

What is ChatGPT?

Chat Generative Pre-Trained Transformer, also known as ChatGPT, is a chatbot created by OpenAI. It is powered by artificial intelligence and can mimic human language after being trained on existing written material. ChatGPT has been used, sometimes unethically, to write essays, articles, resumes, social media posts, code, recipes, etc.

There are ongoing debates about the use of ChatGPT, including its ethics, safety, and impact on how humans communicate and create content. Some speculate that students may become reliant on ChatGPT for their school assignments, rendering writing assignments obsolete. Others argue that ChatGPT offers a variety of beneficial uses. How does this chatbot fit into the world of health care?

Bedside manner

ChatGPT excels at providing empathetic responses to questions. In a recent study, the chatbot was given several questions that had also been answered by human medical professionals. Evaluators compared the two sets of responses and preferred those of the chatbot an estimated 79 percent of the time. ChatGPT also received higher marks for empathy.

Quick and simple responses

The chatbot can generate quick responses to questions. This can free up time for health care providers to concentrate on examinations, tests, etc., while patients still receive answers to their questions. ChatGPT can also translate technical terms and medical jargon into language that is easy to understand, which helps patients follow instructions.

Diagnostics

Accurate and reliable symptom checkers could be developed with the help of the chatbot. These would give individuals better guidance on what their next steps should be when experiencing symptoms. When certain warning signs are present, ChatGPT can be triggered to suggest talking to a physician or to provide relevant resources.

Medical education

ChatGPT can use its resources to educate medical students and providers. The bot can give updates on developments in the medical field and assess the clinical skills of students. The chatbot’s ability to provide quick and accurate resources helps to increase medical literacy.

Patient monitoring

Used alongside clinical notes, ChatGPT can be an excellent tool for record-keeping. The bot can generate summaries of patient interactions, medical histories, symptoms, diagnoses, and treatment plans. ChatGPT can also give medication reminders, instructions, and information about side effects and drug interactions.

Drawbacks

Concerns include possible copyright infringement, medico-legal complications, and the potential for bias in the generated content. ChatGPT is also known to occasionally produce false data in its responses, so its output needs to be double-checked for accuracy. It is important to address any inaccuracies, lack of transparency, and biases that might arise from the use of AI.

The use of AI-generated images constitutes scientific misconduct and should be avoided. Patient privacy is also a concern with the use of AI. There is worry that students will use the bot to write essays and complete assignments, and there are concerns about authorship and accountability for the content produced by ChatGPT.

Conclusion

Ultimately, ChatGPT could be a valuable resource for health care professionals, medical students, and patients; however, it does not replace human interaction. Special attention should be paid to copyright law, privacy, authorship, and misconduct.

PainScale does not use ChatGPT, in any way, in the creation, development or writing of articles or other written content on the site.

Additional sources: News-Medical.net, The Hastings Center, and Vice